Improve quality content/Quality control and assurance deep dive

The current state of online quality control

Much of what follows is summarized from "Peer Review Anew: Three Principles and a Case Study in Postpublication Quality Assurance" by Kelty, Burrus, and Baraniuk [1].

According to Kelty, Burrus, and Baraniuk, "The last 15 years have seen major shifts in the nature of knowledge production and circulation. New modes of authorship, new forms of open licensing and distribution, and new forms of collaboration and peer production have all flourished. New online education projects, scientific journals, and reference works have gained critical mass. But in turn, new anxieties have arisen, especially concerning quality assurance, peer review, reuse, and modification." In short, all of this raises the fundamental question of "how materials produced in a grassroots fashion, by people with varying skill levels and degrees, for widely varied reasons, can be adequately vetted for quality".

In many ways, the authors believe that this anxiety is based on the "largely unexplored assumption that the scholarly publishing infrastructure of the 20th century produced high quality material and that the system of peer review employed therein remains the best system for ensuring quality, if only we can find enough credentialed reviewers." Regardless of how well the 20th-century model of peer review worked, however, they argue that it was designed for a smaller-scale publication system that was very much focused on academia. Now, we need to take both old and new quality control structures and tools into consideration and "match the novel modes of authorship, reuse, licensing, and distribution of materials with equally novel modes of reviewing, assessing, and sharing evaluations."

In particular, there are three pressure points that need to be explored when thinking about a new approach to quality control and assurance:

- New forms of digital objects (e.g. articles, blog entries, video lectures, digital textbooks) that can easily be updated, reused, or transformed. As the authors state, "Making a digital object widely available, easy to edit, and easy to reuse legally also means that it can be constantly changed. This is both a challenge and an opportunity: it renders the idea of 'once and for all' review problematic but also enables objects to undergo novel forms of 'postpublication' review and improvement."

- The volume of digital content, which is often published before it has undergone any kind of quality control. As the authors explain, "With the advent of 1-click publishing, cart and horse have been reversed: we now face a situation of needing new ways to reject after the fact, or, to put it differently, new ways to make the high quality material stand out above the rest, without simply placing yet more strain on the limited time of people who are deemed the most reliable judges of quality."

- The scope of digital content. In this case, "the growth in the volume of publication is accompanied by an expanding demand for review beyond the narrow demand of scholarly and scientific work. Educational materials, textbooks, reference materials, fiction, film, and music are all increasingly reviewed in some form prior to being officially published, and more often than not voluntarily reviewed by peers in the same field or domain."

Interestingly, the authors use Wikipedia as a telling example of how all of these pressures create an interrelated challenge to designing an appropriate quality control and assurance system. As they state, "As the case of Wikipedia demonstrates, not all kinds of information are amenable to the same forms of peer review. The large scale of Wikipedia makes it difficult to handpick reviewers for a Wikipedia encyclopedia entry, but the scope of expertise necessary also outstrips the ability to identify and/or credential a set of appropriate reviewers. In addition, the constantly changing nature of Wikipedia entries renders a 'once and for all review' untenable and less valuable. Wikipedia has created a new kind of reference material as a result; but it has not created a new review process appropriate to this kind of knowledge production."

The authors are also careful to point out that, while providers of online content have the above challenges in common, there is no silver bullet quality control system that is going to work for every situation. Instead, they point out that "quality is not an intrinsic component of the content of a work but rather a feature of how that work is valuable to a specific community of users: its context of use. There is no 'one size fits all' review system that will ensure quality across cutting-edge scientific research, cutting-edge criticism in the humanities, educational resources for high school students around the world, and reference materials like encyclopedias and almanacs."

Different approaches to quality control and assurance

Thus, this wiki page will attempt to provide an overview of the different quality control approaches (both traditional and new) that are being tried in the world of digital content, and to dig deeper into how (and where) those approaches are being applied to the types of content most relevant for Wikimedia: core reference, topical, and education.

Key players

To start with, there appear to be three key groups of players who have emerged in the world of online quality control.

- Users - anyone (and in an ideal world, everyone) who accesses information on a site. Users add value around existing content by providing "quality context" based on relevant knowledge and experience. This is often done using Web 2.0 tools such as comments, user ratings, and reviews.

- Active contributors - a controlled group of trusted users who are given specific, quality-related responsibilities. Active contributors leverage subject and site-specific knowledge and experience and provide "quality guidance" through activities like peer review and selecting content for "featured" or "recommended" status.

- Experts - qualified and verified subject-matter experts who leverage their expertise to bring authority to a site and its content. They serve as the ultimate quality authority, often through providing expert reviews and selecting content for inclusion/deletion.

Overview of different approaches

Leveraging these players has resulted in a spectrum of approaches to quality control. The "expert review" approach is clearly the closest to the review systems described in the overview section above, while "crowdsourcing" is an attempt to overcome the pressure points by leveraging Web 2.0 technology to create a system that is more scalable and works within the confines of easily available resources (average users' knowledge and experience). In many ways, the "active contributor" concept is an attempt to bridge these two ends of the spectrum.

Figure 1 Quality control spectrum

These approaches also communicate very different types of quality assurance to users. As stated above, which type of approach and assurance is appropriate depends not only on the type of content, but also on the context in which it is intended to be used.

Figure 2 Types of quality assurance

Deep dives

Case study of flagged revisions in deWikipedia

Thanks to Church of emacs for helping compile information and perspectives for this case study.

Brief overview

According to the FlaggedRevs report from December 2008, "The German Wikipedia implemented a FlaggedRevs configuration in May 2008. In the German configuration, edits by anonymous and new users have to be patrolled by another longer term editor with reviewer rights before becoming visible as the default revision shown to readers. In the long run, proponents of this system also want to implement the use of FlaggedRevs to validate the accuracy of articles beyond basic change patrolling, but a policy for this use of the technology has not yet been agreed upon" [2]
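The German configuration described above amounts to a simple visibility rule. The sketch below models it in a deliberately simplified way, assuming that edits by users with reviewer rights count as sighted immediately, that readers see the latest sighted revision while editors see the latest revision (the "show stable version to readers (not editors)" option discussed under Community reaction), and that a page with no sighted revision falls back to its latest revision. The class and method names (`Page`, `edit`, `sight`, `default_revision`) are hypothetical illustrations, not the FlaggedRevs extension's actual code or API.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class Revision:
    rev_id: int
    author: str
    sighted: bool = False


@dataclass
class Page:
    # Hypothetical: users with reviewer rights; their own edits count as sighted.
    reviewers: Set[str]
    revisions: List[Revision] = field(default_factory=list)

    def edit(self, rev_id: int, author: str) -> None:
        # Edits by anonymous or new users start out unsighted (pending).
        self.revisions.append(Revision(rev_id, author, sighted=author in self.reviewers))

    def sight(self, rev_id: int, reviewer: str) -> None:
        # Only a user with reviewer rights may mark a pending revision as sighted.
        if reviewer not in self.reviewers:
            raise PermissionError(f"{reviewer} has no reviewer rights")
        for rev in self.revisions:
            if rev.rev_id == rev_id:
                rev.sighted = True
                return
        raise KeyError(rev_id)

    def default_revision(self, is_editor: bool) -> Optional[Revision]:
        """Revision shown by default: the latest one to editors,
        the latest sighted one to readers."""
        if not self.revisions:
            return None
        if not is_editor:
            for rev in reversed(self.revisions):
                if rev.sighted:
                    return rev
        # Editors, or readers of a page with no sighted revision yet
        # (an assumption of this sketch), see the latest revision.
        return self.revisions[-1]
```

For example, after `p = Page(reviewers={"Alice"})`, `p.edit(1, "Alice")` and `p.edit(2, "203.0.113.7")`, readers still get revision 1 while editors get revision 2, until a reviewer calls `p.sight(2, "Alice")`. Note that in this model the anonymous editor still sees their own change, which is the point made below about why hiding edits from readers does not by itself deter vandals.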

What flagged revisions is and isn't

The goal of the flagged revisions software is to improve the quality of wiki content, and it allows versions of a page to be flagged with an array of flags. Two flags are implemented, "reviewed" and "sighted"; in practice, mostly the "sighted" flag is in use (currently on more than twenty WMF wikis), while "reviewed" is used on German Wikiquote. The exact definition of "sighted" varies slightly from project to project, but all projects require that the version has been checked for vandalism. By construction, this check is done by experienced authors, which automatically adds further value; this is reflected in the definition used on de-WP: "A sighted version is one that an experienced author has checked and it is free of vandalism." On de-WP, web link spam and libel are in fact handled significantly better than before.

In addition, it is important to point out that identifying and reverting vandalism is a different goal from stopping it from happening in the first place: "Some people think that FlaggedRevisions can stop vandalism. While there were expectations of that kind in German Wikipedia as well, they have (in my opinion) proven to be wrong. FlaggedRevs are a tool of content validation and reviewing, not a way to fight vandalism. Other tools like AbuseFilter might be better suited for this task. The argument of those people goes as follows: 'People won't vandalize, because their change won't show up on the page. It will be reverted and until then it is hidden from readers. Metaphorically speaking, for vandals it is not fun to throw a stone through a window that is not going to break.' The flaw in this argument is that vandals are not rational people. They vandalize and probably aren't fully aware of what they are doing. If they edit, _they_ see their change, even if no one else does. In my experience, vandalism has not decreased notably on German Wikipedia because of FlaggedRevs."

What it takes to make it work

The implementation and ongoing use of flagged revisions does pose a significant burden on members of the community who are involved with flagging (this burden is clearly dependent on whether or not all, or just some, content is placed under some sort of flagged protection) - "Flagging all articles was clearly much work. It took us longer than many predicted; but finally after almost a year, in February 2009 we finished. [3] Another aspect was that flagging all changes by Non-Editors (users who can't flag a page) is a lot of work as well."

Adding to the challenge of finding enough community resources is the fact that checking articles is neither very exciting nor rewarded: "As reviewing changes is mostly a boring task (most changes are OK; very few have to be reverted) and reviewing doesn't add to your edit count (which seems to be very important for some Wikipedians[4]), it is not easy to motivate people to review changes. At the moment it seems to work for us, though (perhaps because we don't have the burden of having to flag all 900,000 articles). The current average wait for edits by non-logged-in users to be reviewed is about 75 hours, but the median waiting time is less than eight hours. More precisely, 33% of all edits are flagged within one or two hours, 50% within 8 hours, and 66% within 24 hours. The rest is in the long tail and could potentially wait forever; therefore there is a special project taking care of the edits that have waited longest, capping the maximum waiting time at two to three weeks."
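The gap between an average wait of about 75 hours and a median of under eight hours is what a long tail produces: a few edits that wait for days or weeks pull the mean far above the typical case. The sketch below illustrates this with a small, entirely invented sample of waiting times (not German Wikipedia data); the helper `share_within` is a hypothetical name used only here.

```python
import statistics

# Hypothetical waiting times in hours, invented for illustration only.
# Most edits are reviewed quickly; a few sit in the long tail.
wait_hours = [0.5, 1, 1.5, 2, 3, 5, 7, 20, 24, 100, 300, 400]


def share_within(times, limit_hours):
    """Fraction of edits reviewed within limit_hours."""
    return sum(1 for t in times if t <= limit_hours) / len(times)


mean_wait = statistics.mean(wait_hours)
median_wait = statistics.median(wait_hours)

print(f"mean {mean_wait:.1f} h, median {median_wait:.1f} h, "
      f"within 24 h: {share_within(wait_hours, 24):.0%}")
# prints: mean 72.0 h, median 6.0 h, within 24 h: 75%
```

Here three slow edits (100, 300, and 400 hours) account for most of the total waiting time, so the mean is twelve times the median. This is also why a project that targets the oldest pending edits mainly shortens the tail rather than the typical wait.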

Community reaction

Here is some information about community engagement around the launch of flagged revisions: "FlaggedRevs had been announced for quite some time, but a large part of the community found it odd that it was activated without consulting the community beforehand (on whether they wanted FlaggedRevs at all and, if so, what the favored configuration was). It was announced that FlaggedRevs on German Wikipedia were activated as part of a test run; therefore there was a consensus not to judge FlaggedRevs until August, in order to have some data and experience to base the decision on."

A very large number of community members participated in that formal August vote, with the following results: 30.4% Disable FlaggedRevs; 16.6% Keep FlaggedRevs but show latest version to everyone; 59.5% Keep FlaggedRevs, show stable version to readers (not editors). Although the last option did not reach a two-thirds majority, it was accepted, and most formal discussions on the topic came to an end.

Additional data, resources, and potential implications

Click here for additional statistics about flagged revisions on deWikipedia.

And an additional look at review times can be seen here

Core reference content

The current state of core reference quality control

Given its size and popularity, debates about the Wikipedia approach to quality control and assurance tend to dominate the conversation about quality control for online reference resources in general. There are other approaches being tried in the field, however. In fact, there are several other projects that have been created around a more robust quality control and assurance system and with the explicit purpose of attempting to address what the founders see as weaknesses in Wikipedia's model.

A couple of interesting points to note:

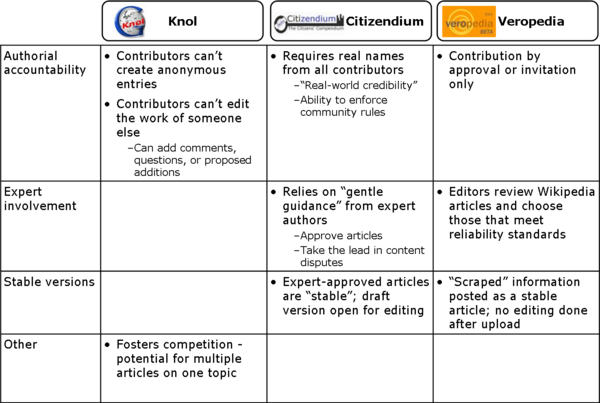

- While the quality control and assurance models that these projects have adopted are all further along the quality spectrum towards expert review, they all take a different approach to authorial accountability and expert involvement. Google Knol is probably the best example of a project that has attempted to maintain some aspects of crowdsourcing.

- Some projects are attempting to pull quality control and assurance levers other than expert/active contributor review. The best example here is probably the experimentation with "stable" vs. "draft" versions of articles.

Approaches being tried in the field

Below is an overview of approaches that other online, mass collaboration encyclopedia projects are taking to quality control. Regardless of how successful these projects have been, they seem to present the best opportunity to learn about different approaches to quality control and their potential implications for such things as community participation and content quality (breadth, reliability, accuracy, etc.).

Figure X Overview of approaches to quality control for online reference

More information about the Citizendium approach to quality control (including arguments for why it will work better than Wikipedia's) can be found here.

Potential implications

There are probably two big categories of potential implications here: Potential options for Wikipedia, and potential impact of more robust quality control and assurance measures on participation and content growth.

There seem to be two ways to think about potential options:

- Wikipedia could look to adopt some of the quality control or assurance measures being tried by other projects. On this front, the sheer number of Wikipedia projects could present the opportunity to experiment with and pilot a variety of options in order to better understand the impact they could have on participation and content quality.

- Wikipedia could look to leverage the quality control efforts of other projects. As the Veropedia example illustrates, there are outside efforts underway to improve content created by the Wikipedia community. This could present Wikipedia with a variety of options, ranging from simply doing nothing to re-incorporating improved content to actively partnering with other projects.

The other potential implications surface from the fact that other projects have so far failed to take off and rival Wikipedia in terms of size and popularity. More research would be required to truly understand why this is so, and it seems important to point out that the lack of success does not necessarily mean that the quality control approaches they have taken do not work. It could be a sign, however, that such robust processes can stifle the growth of newer projects, which could present a lesson for smaller Wikipedias. Some of the more mature projects, however, may be at a stage in their development where the application of some of these quality concepts would be more relevant and useful.

Topical content (news and local information)

The current state of topical content quality control

Rise of citizen journalists, without formal training or editor oversight . . .

Shift in editing responsibilities directly to reporters (erasing some of the distinction between reporters and citizen journalists?) . . .

Approaches being tried in the field

New newsroom approaches include: [5]

- Buddy editing - asking a colleague to do a second read

- Back editing - systematic editing of copy after posting

- Previewing - copy goes to a holding directory for an editor to check before live posting

Potential implications

Educational content (OER)

The current state of OER quality control

For an initial discussion of OER and quality, please see the Expanding content - education fact page.

A few points probably bear repeating:

- The quality bar for educational content is high

- Quality in this context has several important components:

  - Content - does the material contain the right information on the right topic?

  - Context - is the material appropriate for, and relevant to, a specific group of students, in a specific classroom, in a specific school?

  - Efficacy - has the material been proven effective?

- In general, there is a lack of systems, processes, and tools to help teachers identify and use high quality open content.

Approaches being tried in the field

Connexions . . .

Curriki . . .

Other (e.g. MIT OpenCourseware)?