Improve quality content/Opportunities to improve core reference content

Current state of Wikipedia as a core reference

Wikipedia and its core reference content are the cornerstone of the Wikimedia portfolio. The vision for Wikipedia is probably best articulated by Jimmy Wales, who said in 2005:

"Wikipedia is first and foremost an effort to create and distribute a free encyclopedia of the highest possible quality to every single person on the planet in their own language. Asking whether the community comes before or after this goal is really asking the wrong question: the entire purpose of the community is precisely this goal" [1]

Most observers of Wikipedia agree that the project has made what many consider to be surprising progress towards this goal. It has not only become the dominant online encyclopedia (the English version has 3M articles, compared with 1.4M available through Oxford Reference Online and 120K for the online version of Encyclopedia Britannica), but is also currently the 5th most popular website.

As researcher Eric Goldman summed it up in a recent white paper, "The English-language version of Wikipedia has made remarkable progress towards this goal. Wikipedia is one of the top 10 most trafficked Internet destinations in the United States; it has generated nearly three million English-language articles since 2001; and its article quality has been compared favorably to the Encyclopedia Britannica, the traditional gold standard of Encyclopedias." [2]

This fact package will attempt to pull together available research and data in order to more clearly define how much progress really has been made toward the goal of creating a high quality encyclopedia, as well as to identify areas where there might still be room for improvement and growth. In particular, it will dig into the following core components of any encyclopedia:

- Breadth: The scope and depth of content covered within the project

- Reliability: The completeness and accuracy of content coverage

- Article quality and quality assurance: The usage of mechanisms to monitor and improve content reliability

Before digging in, it is important to acknowledge that the relative importance of quality may be different for a mature Wikipedia such as the English or German versions than it is for an emerging Wikipedia that is still trying to build a solid base of content. At the same time, quality is always the eventual goal, and it seems likely that all Wikipedias will face similar quality issues as they grow and mature. Relatedly, most of the data and research on these topics is available for only the English Wikipedia. However, as the largest and most mature Wikipedia, the English version may be the most appropriate language Wikipedia to benchmark against both Community-identified goals for content breadth (e.g. vital articles) and traditional resource materials. This comparison can serve as an indicator of where Wikipedia, at its most robust, is highly successful and where opportunities still exist to be the most comprehensive and usable online, free encyclopedia for the world.

Analysis of content breadth

Overview of current content

The biggest advantage Wikipedia has over other online and traditional encyclopedias is the breadth of the content it provides. Wikipedia's mass collaboration model and active community have resulted in an impressive breadth of content spread across 269 language projects.

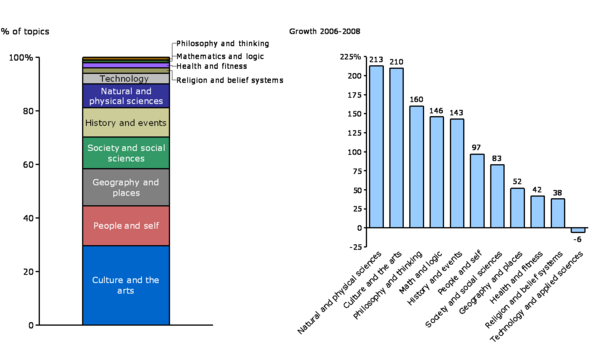

A study by the researchers at PARC [3] provides additional detail about the content breadth of the English Wikipedia. They found information covering 22M categories, which can be grouped into 11 overall topics with the following distribution and growth (2006-2008):

Figure 1 Topic distribution for enWikipedia content

In addition, a high-level analysis of the 100 most popular pages (by page hits) for a sample of other Wikipedia projects demonstrates the variety of information that people around the world are finding on Wikipedia.[4]

Figure 2 Wikipedia page hits by topic

Identification of content gaps

However, for all that we know about what people are accessing on Wikipedia, there is not currently any way to systematically determine what people are searching for but not finding. This is important because, despite the large number of articles that exist on English Wikipedia, there are several available data points that show that there are still content gaps (at least relative to traditional encyclopedias):

- An analysis that started with best-in-class traditional encyclopedias and looked to see which topics were also covered by Wikipedia. Content gaps were found in all three sample subject areas, but the gaps were more pronounced for the humanities [5]

Figure 3 Wikipedia content compared to traditional encyclopedias

- An analysis of the current status (according to Wikipedia's internal quality rating system) of vital articles (a list put together by the community to represent 1,000 articles that any English encyclopedia should have). Overall, over 15% of articles are considered start class and another 10% have a below-average quality rating. There are gaps for all subject areas, but in general they are larger and more pronounced when it comes to humanities and social sciences.[6]

Note: The x-axis shows the relative representation of each subject within the group of vital articles, while the y-axis shows the % of articles within each subject that meet a specific quality rating (see Wikipedia:Quality Rating Scale for additional information on quality ratings)

Figure 4 Vital articles by subject and quality rating

Potential areas to dig deeper

Thus, the data suggests that even a Wikipedia as mature as the English version cannot yet be considered "done". However, filling the remaining gaps will not necessarily be easy, and there are several theories about barriers to completion, including:

- The differences in the interests and attention of Wikipedia's contributors lead to some subject areas being better covered than others. Some suggest the lack of diversity among contributors (e.g. over-representation of white males with similar backgrounds) is the driving force behind the strength of content areas like the physical sciences and the relative weakness of content areas like the humanities and social sciences when measured by vital articles. (add links)

- "The excitement of 'colonizing the frontier' has ended and Wikipedia turns its efforts to cleanup, maintenance, and gradual expansion" [7]

- It is easier for the majority of Wikipedia contributors to write about accessible topics (e.g. pop culture) than topics that are usually the domain of subject-matter experts (e.g. law or medicine). Thus, the lack of experts is the driving force behind the rapid expansion of some subject areas and the slower growth of others

- "The low-hanging fruit has disappeared" (from an interview with a Wikipedia researcher)

- Wikipedia has the contributor base that it needs, but there is no easy and scalable way for those contributors to identify, prioritize, and set their resources against the highest priority content gaps. Thus, Wikipedia needs better systems and tools to help guide content creation (add links)

In order to understand the importance of these, or other, factors, it might be helpful to understand more about:

- The complete picture of what content gaps exist relative to a traditional encyclopedia, as well as what aspects of that missing content would be most valuable to users

- What drives contribution patterns (e.g. how active contributors decide where to go next)

- What systems and tools would make it easier for active contributors or outside experts to easily identify and begin to address content gaps

Analysis of content reliability

Strengths of the Wikipedia model vs. traditional encyclopedias

According to Wikipedia's own entry on encyclopedias: "Some systematic method of organization is essential to making an encyclopaedia usable as a work of reference. There have historically been two main methods of organizing printed encyclopaedias: the alphabetical method (consisting of a number of separate articles, organised in alphabetical order), or organization by hierarchical categories. The former method is today the most common by far, especially for general works. The fluidity of electronic media, however, allows new possibilities for multiple methods of organization of the same content. Further, electronic media offer previously unimaginable capabilities for search, indexing and cross reference"

From an organizational perspective, Wikipedia certainly benefits from its digital, wiki-based model. Not only is content dynamic and current (both in terms of what topics are covered and what information is included), but users have a variety of options when it comes to searching and browsing for content. In many cases, Wikipedia's search-optimized pages mean that users end up at a Wikipedia article without even having to think about it. If they come to Wikipedia directly, they can enter an article title in the search box (which has been improved to provide context-based suggestions as a user types) or browse through a series of reference lists and indices. Finally, Wikipedia articles themselves provide users with easy access to related internal and external content that can be used for cross-reference and further research.

Identification of reliability gaps

However, other aspects of the Wikipedia model make it difficult for users (especially casual users) to take full advantage of these benefits and easily find the information that they need. There is currently what appears to be a proliferation of reference lists that could be browsed (including overviews, featured content, topic lists, topic portals, glossaries, and timelines) and the lack of a single, internally-consistent classification system has resulted in literally thousands of potential categories an article can belong to and a category-logic that is admittedly not intuitive to the average person.

To illustrate, here are two examples of Wikipedia FAQs on categories: [8]

Why is an article not in a category I would expect?

Articles are not usually placed in every category to which they logically belong. In many cases they will not be placed directly into a category if they belong to one of its subcategories. This is because otherwise categories would become too large, and the list of categories on articles too long. To find the articles you are looking for, it may be necessary to dig down. For example, you won't find w:Oslo listed at the category called Cities, but if you start from there and click "Cities by country", and then "Cities and towns in Norway", you'll have arrived at the right place. Conversely, if you are at the w:Oslo article and you want to find the category of all cities, start by clicking Cities and towns in Norway and navigate up the tree to its parent categories.

Different parts of Wikipedia use different schemes for organizing articles into categories. The main types of categories used are:

- topic categories – categories of articles relating to a particular topic, such as w:Category:Geography or w:Category:Paris.

- list categories – categories of articles on subjects in a particular class, such as w:Category:Villages in Poland.

- list-and-topic categories – categories which are combinations of the two above types.

- intermediate categories – categories used to organize large classes of subcategories, such as w:Category:Albums by artist.

- universal categories – categories used to provide a complete list of articles which are otherwise normally divided into subcategories.

- project categories – categories used mainly by Wikipedia's editors for project management purposes, rather than for browsing. A common type is stub categories, which contain short ("stub") articles in a particular field. You can help build Wikipedia by expanding these stubs into longer articles!
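The "dig down" and "navigate up" behavior described in the FAQ amounts to walking a graph of categories and their parents. The following is a minimal sketch in Python under stated assumptions: the `PARENTS` map is a hypothetical, hand-written slice of the category graph (only the Oslo chain is taken from the FAQ text above), and the function names are illustrative. It does not query Wikipedia and is not how MediaWiki itself stores or resolves categories.

```python
from collections import deque

# Hypothetical child -> parents map. Only the Oslo chain mirrors the FAQ
# example; this is illustrative data, not an export from Wikipedia.
PARENTS = {
    "Oslo": ["Cities and towns in Norway"],
    "Cities and towns in Norway": ["Cities by country"],
    "Cities by country": ["Cities"],
    "Cities": [],
}


def ancestor_categories(page, parents):
    """Return every category reachable by walking up from `page`, nearest first."""
    found = []
    queue = deque(parents.get(page, []))
    while queue:
        category = queue.popleft()
        if category in found:
            continue  # a category can be reached by more than one path
        found.append(category)
        queue.extend(parents.get(category, []))
    return found


def is_in_category(page, category, parents):
    """True if `category` is a direct or indirect parent of `page`."""
    return category in ancestor_categories(page, parents)


if __name__ == "__main__":
    print(ancestor_categories("Oslo", PARENTS))
    print(is_in_category("Oslo", "Cities", PARENTS))
```

In this sketch, Oslo is not a direct member of "Cities" but is found by following parent links, which is the situation the FAQ answer describes.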

These organizational issues and structural inconsistencies also extend to individual articles. To illustrate this, here are two examples from Featured Articles that are on similar topics and could be expected to have at least a common basic structure and categorization: Peregrine Falcon and Albatross.

The organization and contents of the Albatross article look like this:

- Biology

- Taxonomy and evolution

- Morphology and flight

- Distribution and range at sea

- Diet

- Breeding and dancing

- Albatrosses and humans

- Etymology

- In culture

- Birdwatching

- Threats and conservation

- Species

And it is assigned to 6 categories: Albatrosses, Diomedeidae, Bird families, Heraldic birds, Seabirds, and Procellariiformes

However, the organization and contents of the Peregrine Falcon article look like this:

- Description

- Taxonomy and systematics

- Subspecies

- Ecology and behavior

- Feeding

- Reproduction

- Relationship with humans

- Pesticides

- Illegal collectors

- Falconry

- Recovery efforts

- Current status

And it is assigned to 22 categories, including: Falco, Falcons, Falconry, Cosmopolitan species, Arctic birds, Arctic land animals, Wildlife of the Arctic, African raptors, Birds of Europe, Birds of North America, Birds of Australia, Birds of South Australia, Birds of Tasmania, Birds of Western Australia, Birds of Pakistan, Birds of Serbia, Birds of Chile, Birds of Greenland, Birds of Turkey, British Isles coastal fauna, Birds of Italy

Potential areas to dig deeper

- How can Wikipedia better leverage its technology and platform to ensure at least an internally consistent basic categorization structure?

- How can Wikipedia further improve search functionality to help users quickly find the information they are looking for (e.g. advanced features)?

- What structures and processes would help promote structural consistency within and across articles on similar topics?

Analysis of article quality and quality assurance

Overview of Wikipedia's current approach to quality assurance

Wikipedia's overall approach to quality can be described as "eventualist" - because anyone can edit anything, and can examine its edit history, it will eventually become (or already has become) reliable. But how do Wikipedians decide what is true and what is not?

As Simson Garfinkel (a computer science professor and contributing editor at Technology Review) describes it, "Wikipedia has evolved a radically different set of epistemological standards - standards that aren't especially surprising given that the site is rooted in a Web-based community . . . What makes a fact or statement fit for inclusion is that it appeared in some other publication - ideally one that is in English and available for free online. 'The threshold for inclusion in Wikipedia is verifiability, not truth,' states Wikipedia's official policy on the subject" [9]

This is a direct reference to Wikipedia's core content policies, which together form the basis for how participants decide what information to keep and what to remove. These policies can be summarized as follows:

- Verifiability. As stated in Wikipedia:Verifiability, "The threshold for inclusion in Wikipedia is verifiability, not truth - that is, whether readers are able to check that material added to Wikipedia has already been published by a reliable source, not whether we think it is true". Reliable sources are defined, in general, as "peer-reviewed journals and books published in university presses; university-level textbooks; magazines, journals, and books published by respected publishing houses; and mainstream newspapers. Electronic media may also be used. As a rule of thumb, the greater the degree of scrutiny involved in checking facts, analyzing legal issues, and scrutinizing the evidence and arguments of a particular work, the more reliable the source is".

- Neutral point of view. Wikipedia:Neutral point of view states that "All Wikipedia articles and other encyclopedic content must be written from a neutral point of view, representing fairly, and as far as possible without bias, all significant views that have been published by reliable sources".

- No original research. Wikipedia:No original research states that "Wikipedia does not publish original research or original thought. This includes unpublished facts, arguments, speculation, and ideas; and any unpublished analysis or synthesis of published material that serves to advance a position. This means that Wikipedia is not the place to publish your own opinions, experiences, arguments, or conclusions".

Current quality assurance measures

Along with these content policies, Wikipedia has also evolved a series of processes and procedures intended to improve quality and make quality processes more transparent to both contributors and casual readers. These include:

- Bots dedicated to reverting vandalism

- Volunteers dedicated to quality assurance tasks (e.g. vandalism, copy editing)

- Editors tagging articles as "controversial" or "needs sources"

- Internal review processes

- Qualified articles being tagged as "Featured" (considered to be the best articles in Wikipedia, as determined by Wikipedia's editors; reviewed as featured article candidates for accuracy, neutrality, completeness, and style according to featured article criteria) or "Good" (considered to be of good quality but which are not yet, or are unlikely to reach, featured article quality)

- Project groups that rate additional articles according to the complete Quality Rating Scale

- See Wikipedia:Featured article review, Wikipedia:Good articles, and Wikipedia:Quality Rating Scale for more information

Current perceived and actual article quality

Despite arguments (especially from academic experts) that a group of millions of volunteers with unknown interests and biases could never reach consensus and produce reliable information, Wikipedia's popularity is often cited as evidence that current policies and processes must work (users perceive Wikipedia to be of high enough quality that they keep coming back). And as the earlier quote from researcher Eric Goldman points out, there is research showing that Wikipedia's actual quality compares favorably to Encyclopedia Britannica and other best-in-class reference resources. The best-known example is the 2005 Nature study, which found that Wikipedia's science entries come close to Britannica's in terms of accuracy (an average of 2.92 mistakes per article for Britannica and 3.86 for Wikipedia).

Other researchers point to "surprisingly low" rates of vandalism as evidence that current policies and procedures "seem to work and are fairly effective" (See notes from an interview with Ed Chi). A 2008 study called "The Collaborative Organization of Knowledge" found that "4% of article revisions were tagged in descriptive comment as 'reverts' - a typical response to vandalism. They occurred an average of 13 hours after their preceding change. Looking for articles with at least one revert comment, 11% of Wikipedia articles have been vandalized at least once." [10] This seems consistent with recent research done by a member of the Community, which found that: [11]

- Focusing on just this year, vandalism was present 0.21% of the time (0.27% over the entire history of Wikipedia)

- The time distribution of vandalism has a long tail; the median time to revert is 6.7 minutes with a mean time to revert of 18.2 hours (one revert in the sample went back 45 months)

- Nearly 50% of reverts occur in 5 minutes or less
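The gap between a median of minutes and a mean of hours is what a long-tailed distribution produces: a handful of very late reverts pull the mean up while leaving the median almost untouched. The sketch below illustrates the effect in Python. The `revert_minutes` values are invented for illustration and are not the data behind the figures above; the helper name `share_within` is likewise hypothetical.

```python
from statistics import mean, median

# Invented sample of time-to-revert values in minutes (NOT the study's data).
# Most reverts are fast; two stragglers form the long tail.
revert_minutes = [1, 2, 3, 4, 5, 7, 9, 30, 600, 10000]


def share_within(samples, limit):
    """Fraction of samples that are less than or equal to `limit`."""
    return sum(1 for value in samples if value <= limit) / len(samples)


typical = median(revert_minutes)   # driven by the bulk of fast reverts
average = mean(revert_minutes)     # dominated by the few very slow reverts
fast_share = share_within(revert_minutes, 5)

if __name__ == "__main__":
    print(f"median: {typical} min")
    print(f"mean: {average} min")
    print(f"share reverted within 5 min: {fast_share:.0%}")
```

For this reason the median and the share of fast reverts describe the typical case, while the mean mostly reflects the rare edits that go unnoticed for a long time.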

Still, many criticisms of Wikipedia focus on the quality of the content, using arguments that article quality is about more than just factual correctness. Specific popular criticisms (courtesy of the Citizendium website) include:

- Too many articles are written amateurishly

- Too many articles are mere disconnected grab-bags of factoids, not made coherent by any sort of narrative

- In some fields and topics, groups “squat” on topics in order to make them reflect a certain bias

- When experts add obsessive amounts of detail to articles, this can make Wikipedia difficult to read and impossible to verify

- The people with the most influence are those with the most time, not the most knowledge

Taken as a whole, the available research into Wikipedia's quality suggests that its quality is inconsistent and unreliable across subjects, topics, and individual articles. This research includes:

- Reference Services Review (2008) – “Comparison of Wikipedia and other encyclopedias for accuracy, breadth, and depth in historical articles” [12]

- PC Pro (2007) – Wikipedia Uncovered: Wikipedia vs. The Old Guard [13]

- The Denver Post (2007) – “Grading Wikipedia”

- Nature (2005) – Internet Encyclopedias go head to head

- “Comparing featured article groups and revision patterns correlations in Wikipedia” (2009)

- “Automatically Assessing the Quality of Wikipedia Articles” (2008)

- “Assessing the value of cooperation in Wikipedia” (2007)

- “The Quality of Open Source Production: Zealots and Good Samaritans in the Case of Wikipedia” (2007)

- “An empirical examination of Wikipedia’s credibility” (2006)

Identification of quality assurance gaps

The existence of any inconsistencies in quality, regardless of how widespread, presents a challenge for users because (as mentioned before) there is no consistent and reliable way for them to recognize the trouble spots and tell the good from the bad. Researchers like Ed Chi have pointed out that few of the aforementioned quality assurance procedures are actually used consistently[14], and the data below highlights how systems like featured article review lack scale and act as a bottleneck to assessing the quality of Wikipedia's ever-expanding supply of content. Here is data on the growth of featured and good articles over time. In general, the number of these community-reviewed articles has not kept pace with the growth in the overall number of articles:

Figure 5 Overview of Featured and Good Articles

The previous chart showing the status of vital articles is also relevant here, as it shows how the community has been able to get less than 20% of these articles to reach Featured or Good article status. A version of this data is directly referenced in one of the proposals that has been submitted as part of the strategic planning process:

"Wikipedia, at last count, had 2,993,967 articles. This is a staggering number and testiment to the work of many people, however it betrays the fact that we have yet to raise the vital articles, articles that every encyclopedia needs, to a consistent quality. Of the level 1 vital articles, only 1 has reached even "Good article" status. At level 2 the ratio becomes arguably worse.

I think we owe it to ourselves and the status of Wikipedia as a respectable source of information, to improve the vital articles to a respectable level. As much as it may be unpopular, I cannot think of a better way to improve the respectibility and impact of Wikipedia than this."

Analysis of approaches to addressing those gaps

As some Wikipedia projects have seen, however, there is a balance to strike between imposing more robust quality processes to address article quality gaps and stifling the growth of nascent and emerging Wikipedias. The debate about how best to strike this balance has been a popular topic of conversation recently, driven by the news that English Wikipedia will roll out some flagged protection processes on articles about living people.

Eric Goldman writes about this debate in his white paper "Wikipedia's Labor Squeeze and its Consequences", where he describes the "inherent tensions between editability and credibility." As he explains, "Wikipedia has been progressively adding new and additional editing restrictions, which I think is consistent with a macro-trend to slowly 'raise the drawbridge' on the existing site content and suppress future contributions. If so, Wikipedia may be incrementally moving away from free editability."[15] Thus, the argument goes, it may not be possible to truly raise the quality bar without having an adverse effect on participation and content contribution.

Flagged revisions

To help understand if this argument has merit, it makes sense to dig deeper into the best example of a Wikipedia implementing a systematic quality assurance engine - German Wikipedia and flagged revisions. According to the FlaggedRevs report from December 2008, "The German Wikipedia implemented a FlaggedRevs configuration in May 2008. In the German configuration, edits by anonymous and new users have to be patrolled by another longer term editor with reviewer rights before becoming visible as the default revision shown to readers. In the long run, proponents of this system also want to implement the use of FlaggedRevs to validate the accuracy of articles beyond basic change patrolling, but a policy for this use of the technology has not yet been agreed upon" [16]

For a more detailed case study on German flagged revisions, please see the deep dive into quality control and assurance.

In addition, a Wikimania 2009 presentation covers quality control mechanisms in deWikipedia.

Other tools and initiatives

Community members and researchers have also developed and/or are testing a variety of tools to help improve quality by making it more transparent and reliable, often without having to curb free editability. These tools include:

- WikiTrust - an optional feature that color-codes text based on the reliability of its author and the length of time it has persisted on a page; meant to give readers a better sense of which statements are settled and which have the potential to be controversial or wrong [17]

- WikiDashboard - provides information about edit activity trends, as well as the edit activity of specific users

- Wikibu - uses Wikibu-Points as a "rough indication of the reliability of an article" (points are assigned for criteria such as the number of visitors or references)

Rejected proposals

There are also several proposed initiatives related to quality that have failed over time to get enough community support to become policy. The list can be found here: Wikipedia rejected proposals

Potential areas to dig deeper

- Quality improvement work is difficult, time-consuming, and requires an understanding of Wikipedia's culture and internal processes (e.g. content policies, talk pages, community approach to decision-making). How can Wikipedia make that process more transparent and efficient?

- What is the actual (as opposed to perceived) impact of strengthening quality assurance structures and processes on participation (e.g. content creation, improvement, and maintenance)? What else can Wikipedia learn from existing internal initiatives and external benchmarks?

Notes

- ↑ [1]

- ↑ [2]

- ↑ “What’s in Wikipedia: Mapping Topics and Conflict Using Socially Annotated Category Structure”

- ↑ Data from [3]. Note: "Special", "Portal", and "Wikipedia" pages (e.g. Main Page, Search, Citation Needed) have been removed from these calculations in order to focus in on content that is being viewed. Obvious redirects to other sites (e.g. YouTube, Facebook, Twitter, and MySpace) have also been removed for the same reason

- ↑ Note: Comparisons are to content in Encyclopedia of Physics, Encyclopedia of Linguistics, and New Princeton Encyclopedia of Poetry and Poetics; Alexander Halavais and Derek Lackaff, “An Analysis of Topical Coverage in Wikipedia” (2008)

- ↑ Analysis from data found at Wikipedia:Vital Articles

- ↑ Nate Anderson, "Despite changes, Wikipedia will still 'fail within 5 years'" [4]

- ↑ Wikipedia:FAQ/Categories

- ↑ Garfinkel, Simson L., "Wikipedia and the Meaning of Truth", Technology Review, November/December 2008. http://www.technologyreview.com/web/21558/?a=f

- ↑ Spinellis and Louridas, "The Collaborative Organization of Knowledge", Communications of the ACM, August 2008 [5]

- ↑ The original foundation-l posting is here; More summary data is here

- ↑ [6]

- ↑ [7]

- ↑ [8]

- ↑ [9]

- ↑ [10]

- ↑ [11]